Solve issues that come with imbalanced datasets

As we mentioned before, understanding what our variables are and how they're structured is only part of the process in ML. It's also essential to understand how frequently each of their values occurs. For classification problems, we specifically need to understand the frequencies of the response variable's classes. As data professionals, we might encounter datasets in which those classes are very unequally represented.

One example of such unequal datasets comes from fraud detection. We could have millions of examples of non-fraudulent transactions and only a few thousand examples of actual fraudulent transactions. How can we build a model that detects fraud when there are so few fraudulent examples to train it on?

This issue is known as class imbalance. A class imbalance occurs when the response (target) variable in a dataset contains more instances of one outcome than another. The class with more instances is called the majority class, while the class with fewer instances is called the minority class.

It's extremely rare for a dataset to have a perfect 50-50 split of the outcomes; there's normally some degree of imbalance. However, this isn't necessarily a problem. In most cases, a 70-30 or 80-20 split is fine. Major issues tend to arise only when the majority class makes up 90% or more of the dataset. Even then, we only know whether the imbalance actually hurts the model after we've built it and evaluated its performance.
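As a minimal sketch, the class split of the response variable can be checked in plain Python before any modeling. The function names and the fraud labels below are hypothetical, and the 90% threshold simply mirrors the rule of thumb above. A flag like this only marks a candidate problem; whether the imbalance hurts the model still has to be confirmed after training.

```python
from collections import Counter

def class_distribution(labels):
    """Return each class's share of the dataset, most frequent class first."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {cls: n / total for cls, n in counts.most_common()}

def is_severely_imbalanced(labels, threshold=0.9):
    """Flag the dataset if the majority class makes up `threshold` or more of it."""
    shares = class_distribution(labels)
    return max(shares.values()) >= threshold

# Hypothetical fraud labels: 0 = legitimate, 1 = fraudulent.
labels = [0] * 9_950 + [1] * 50
print(class_distribution(labels))      # {0: 0.995, 1: 0.005}
print(is_severely_imbalanced(labels))  # True
```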

There are two techniques that allow us to fix any potential issues: upsampling and downsampling. Both involve altering the data in a way that preserves the information it contains while reducing the imbalance.

Downsampling

Downsampling involves altering the majority class by using fewer of its original entries, producing a more even split. As the number of majority-class entries decreases, the classes become more balanced.

We can use different techniques to achieve this, but they're generally all based on the same concept. One technique is to randomly select majority-class entries to remove; another is to follow a formula. For example, we can take the mean of two data points in the majority class, remove those two data points, and add their average as a single new data point.
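A minimal sketch of both downsampling ideas in plain Python follows, assuming each row is a list of numeric features and the majority-class rows have already been separated from the minority class. The function names and toy data are hypothetical, and the pair-averaging function is one simple reading of the "mean of two data points" formula, not a standard library routine.

```python
import random

def random_downsample(majority, target_size, seed=0):
    """Randomly keep `target_size` majority-class rows (sampling without replacement)."""
    rng = random.Random(seed)
    return rng.sample(majority, target_size)

def mean_pair_downsample(majority, seed=0):
    """Replace each random pair of majority-class rows with their element-wise mean,
    roughly halving the class size. An odd leftover row is kept unchanged."""
    rng = random.Random(seed)
    rows = majority[:]          # copy so the caller's list is not reordered
    rng.shuffle(rows)
    merged = []
    for i in range(0, len(rows) - 1, 2):
        a, b = rows[i], rows[i + 1]
        merged.append([(x + y) / 2 for x, y in zip(a, b)])
    if len(rows) % 2 == 1:
        merged.append(rows[-1])
    return merged

# Hypothetical majority class: 10 rows with two numeric features each.
majority = [[float(i), 2.0 * i] for i in range(10)]
kept = random_downsample(majority, 4)
merged = mean_pair_downsample(majority)
print(len(kept), len(merged))  # 4 5
```

Averaging only makes sense for numeric features; categorical columns would need a different merge rule.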

Upsampling

Upsampling is the opposite of downsampling. Instead of reducing the frequency of the majority class, we artificially increase the frequency of the minority class.

Similar to downsampling, there are multiple ways to achieve this. The simplest technique is called random oversampling, where randomly selected data points in the minority class are duplicated and added back to the dataset. Alternatively, mathematical techniques can be used to generate synthetic, non-identical data points, which are then also added to the dataset.
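The sketch below shows both flavors in plain Python, again assuming rows are lists of numeric features. The function names are hypothetical. `interpolate_oversample` is a simplified stand-in for techniques such as SMOTE: real SMOTE interpolates between a point and its nearest minority-class neighbors, whereas this version pairs minority points at random. In practice, the imbalanced-learn library provides `RandomOverSampler` and `SMOTE` for this.

```python
import random

def random_oversample(minority, target_size, seed=0):
    """Random oversampling: append randomly chosen duplicates of minority rows
    (sampling with replacement) until the class has `target_size` rows."""
    rng = random.Random(seed)
    extra = rng.choices(minority, k=max(0, target_size - len(minority)))
    return minority + [row[:] for row in extra]

def interpolate_oversample(minority, target_size, seed=0):
    """Simplified synthetic oversampling: each new row lies at a random point on the
    line segment between two randomly chosen minority rows, so it is not an exact copy.
    Assumes the minority class has at least two rows."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(max(0, target_size - len(minority))):
        a, b = rng.sample(minority, 2)
        t = rng.random()  # position along the segment, in [0, 1)
        synthetic.append([x + t * (y - x) for x, y in zip(a, b)])
    return minority + synthetic

# Hypothetical minority class: 3 rows with two numeric features each.
minority = [[100.0, 200.0], [101.0, 202.0], [103.0, 206.0]]
copies = random_oversample(minority, 10)
synthetic = interpolate_oversample(minority, 10)
print(len(copies), len(synthetic))  # 10 10
```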

Downsampling or upsampling

If upsampling and downsampling both produce a more balanced dataset, we might wonder which one to use. Most of the time, we won't know which one works better until we've built the model and observed how it performs. However, there are some general rules about when to upsample and when to downsample.

Downsampling is normally more effective when working with extremely large datasets. If we have a dataset that has 100 million points but has a class imbalance, we don’t need all of that data to build a good model. We definitely don’t need the additional data that would come from upsampling.

Keeping tens of thousands of data points is a good rule of thumb, but ultimately this needs to be validated by checking that model performance doesn't deteriorate as we train with less data.

Alternatively, upsampling can be better when working with a small dataset. If we’re working with a dataset that only has 10,000 entries, removing any of that data will more than likely have a negative impact on the model’s performance.

Balancing the data

Building models with both upsampled and downsampled data will show which technique is better in a given situation. We'll also have to experiment with the split our rebalancing achieves. Balancing the data so the classes are split 50-50 might not always be optimal; turning a 99-1 split into a 70-30 split might be enough.
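The arithmetic behind a target split is simple. The hypothetical helpers below compute how many rows a class needs so that the minority class ends up with a chosen share, for example 0.3 for a 70-30 split rather than 0.5 for 50-50.

```python
def downsample_target(n_minority, minority_share):
    """Majority rows to keep so the minority class makes up `minority_share`
    of the rebalanced data (e.g. 0.3 for a 70-30 split)."""
    return round(n_minority * (1 - minority_share) / minority_share)

def upsample_target(n_majority, minority_share):
    """Minority rows needed (after upsampling) for the same kind of split."""
    return round(n_majority * minority_share / (1 - minority_share))

# Hypothetical 99-1 dataset: 99,000 majority rows and 1,000 minority rows.
print(downsample_target(1_000, 0.5))  # 1000  -> 50-50 split
print(downsample_target(1_000, 0.3))  # 2333  -> 70-30 split
print(upsample_target(99_000, 0.3))   # 42429 -> 70-30 split
```

Downsampling to 70-30 here keeps 2,333 of the 99,000 majority rows, while upsampling to the same split grows the minority class from 1,000 to 42,429 rows, which is why dataset size matters when choosing between the two.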

Unaltered test data

Whether we upsample or downsample, it is important to hold out a partition of test data that is unaltered by the sampling adjustment. We do this because we need to understand how well our model predicts on the actual class distribution observed in the world that our data represents.
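A minimal sketch of the correct order of operations: split first, then resample only the training partition. It works on row indices, uses a hand-rolled stratified split so the test set keeps the original class shares, and upsamples the minority class in the training set by random oversampling. The function names and labels are hypothetical; scikit-learn's `train_test_split` with its `stratify` parameter covers the splitting step in practice.

```python
import random

def stratified_split(labels, test_fraction=0.2, seed=0):
    """Split row indices so each class contributes `test_fraction` of its rows to the test set."""
    rng = random.Random(seed)
    train_idx, test_idx = [], []
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        n_test = round(len(idx) * test_fraction)
        test_idx += idx[:n_test]
        train_idx += idx[n_test:]
    return train_idx, test_idx

def upsample_train(train_idx, labels, minority=1, seed=0):
    """Randomly duplicate minority-class training indices until both classes are equal."""
    rng = random.Random(seed)
    minority_idx = [i for i in train_idx if labels[i] == minority]
    majority_idx = [i for i in train_idx if labels[i] != minority]
    extra = rng.choices(minority_idx, k=len(majority_idx) - len(minority_idx))
    return majority_idx + minority_idx + extra

labels = [0] * 950 + [1] * 50                      # hypothetical 95-5 dataset
train_idx, test_idx = stratified_split(labels)
balanced = upsample_train(train_idx, labels)       # the test indices are never touched
test_labels = [labels[i] for i in test_idx]
print(test_labels.count(1) / len(test_labels))     # 0.05, the original distribution
print(sum(labels[i] for i in balanced) / len(balanced))  # 0.5 after upsampling
```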

Consequences of sampling

The first consequence is the risk of our model predicting the minority class more often than it should. By rebalancing the classes so that our model learns to recognize the minority class, we might build a model that over-recognizes it. This happens because, in training, the model learns a class distribution that differs from the one it will encounter in the real world.

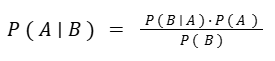

Changing the class distribution affects the underlying class probabilities learned by the model. Consider, for example, how the Naive Bayes algorithm works. To calculate the probability of a class given the features, it uses the background (prior) probability of that class in the data.
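For reference, Bayes' theorem written with $A$ as a class and $B$ as the observed features is:

$$
P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}
$$

Here $P(A)$ is the class prior, $P(B \mid A)$ is the likelihood of the features given the class, and $P(B)$ is the overall probability of observing those features.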

In the numerator, P(A) (the probability of class A) depends on the class frequencies encountered in the data. If the data has been enriched with a particular class, this probability will not reflect real-world patterns, because it's based on altered data.
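A small worked example makes the effect concrete. The sketch below applies Bayes' theorem to a binary fraud problem with the same likelihoods but two different priors: the true 1% fraud rate and a rebalanced 50% rate. The likelihood values are made up for illustration.

```python
def posterior(prior_a, likelihood_a, likelihood_not_a):
    """P(A | B) for a binary problem via Bayes' theorem:
    P(B|A)P(A) / [P(B|A)P(A) + P(B|not A)P(not A)]."""
    numerator = likelihood_a * prior_a
    evidence = numerator + likelihood_not_a * (1 - prior_a)
    return numerator / evidence

# Hypothetical likelihoods of seeing some feature pattern B:
# 30% of fraudulent transactions show it, 1% of legitimate ones do.
p_b_given_fraud, p_b_given_legit = 0.30, 0.01

true_prior = 0.01       # fraud is 1% of real transactions
rebalanced_prior = 0.5  # fraud is 50% of the rebalanced training data

print(round(posterior(true_prior, p_b_given_fraud, p_b_given_legit), 3))        # 0.233
print(round(posterior(rebalanced_prior, p_b_given_fraud, p_b_given_legit), 3))  # 0.968
```

With identical likelihoods, the rebalanced prior alone pushes the predicted fraud probability from about 23% to about 97%, which is exactly the over-recognition of the minority class described above.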

When to do class rebalancing

Class rebalancing should be reserved for situations where other alternatives have been exhausted and we are still not achieving satisfactory model results. Some guiding questions include:

- How severe is the imbalance? A moderate imbalance (a minority class below 20% of the data) may not require any rebalancing. An extreme imbalance (a minority class below 1% of the data) would be a more likely candidate.

- Have you already tried training a model using the true distribution? If the model doesn’t fit well due to very few samples in the minority class, then it could be worth rebalancing, but you won’t know unless you first try without rebalancing.

- Do you need to use the model’s predicted class probabilities in a downstream process? If all you need is a class assignment, class rebalancing can be a very useful tool, but if you need to use your model’s output class probabilities in another downstream model or decision, then rebalancing can be a problem because it changes the underlying probabilities in the source data.

Disclaimer: Like most of my posts, this content is intended solely for educational purposes and was created primarily for my personal reference. At times, I may rephrase original texts, and in some cases, I include materials such as graphs, equations, and datasets directly from their original sources.

I typically reference a variety of sources and update my posts whenever new or related information becomes available. For this particular post, the primary source was the Google Advanced Data Analytics Professional Certificate program.