Introduction to Feature Engineering

Feature engineering is the process of using practical, statistical, and data science knowledge to select, transform, or extract characteristics, properties, and attributes from raw data. Datasets used in the workplace can sometimes require multiple rounds of exploratory data analysis (EDA) and feature engineering to get everything into a suitable format to train a model. The three general categories of feature engineering are feature selection, transformation, and extraction.

Feature Selection

Feature selection identifies the features in the data that contribute the most to predicting our response variable. In other words, we drop features that do not help in making a prediction.

Sample: A dataset with Outlook, Temp, Humidity, Windy features and Play Soccer as a target variable. Being windy outside might not affect playing soccer, so we drop the Windy column.

Feature Transformation

In feature transformation, we modify the existing features in the dataset so that they are better suited for modeling, in a way that improves accuracy when training the model.

Sample: Our data might include exact temperatures, but we might only need a feature that indicates whether it’s hot, cold, or temperate.
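
As a minimal sketch of this kind of transformation, the snippet below bins exact temperatures into three categories with pandas pd.cut. The temperatures and the cut points (60 °F and 80 °F) are assumptions made up for illustration, not values from the source.

```python
import pandas as pd

# Hypothetical exact temperatures in degrees Fahrenheit
df = pd.DataFrame({"Temp": [45, 68, 72, 85, 91]})

# Assumed cut points: up to 60 is cold, above 60 up to 80 is temperate, above 80 is hot
df["Temp Category"] = pd.cut(
    df["Temp"],
    bins=[float("-inf"), 60, 80, float("inf")],
    labels=["cold", "temperate", "hot"],
)
print(df)
```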

Feature Extraction

Feature extraction involves using one or more existing features to create a new one that would improve the accuracy of the algorithm.

Sample: We consider a new variable called “muggy” that could be used to model whether or not we play soccer. If the temperature is warm and the humidity is high, “muggy” would be True. If either the temperature or the humidity is low, “muggy” would be False.
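
A minimal pandas sketch of extracting “muggy” from two existing columns follows. The readings and the thresholds (75 °F for warm, 70% relative humidity for high) are hypothetical, chosen only to make the rule concrete.

```python
import pandas as pd

df = pd.DataFrame({
    "Temp": [85, 88, 60, 90],        # degrees Fahrenheit (hypothetical)
    "Humidity": [80, 40, 85, 75],    # percent relative humidity (hypothetical)
})

# Hypothetical thresholds for "warm" and "high humidity"
WARM_F = 75
HUMID_PCT = 70

# "Muggy" is True only when both conditions hold at once
df["Muggy"] = (df["Temp"] >= WARM_F) & (df["Humidity"] >= HUMID_PCT)
print(df)
```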

A side note

While we can make a lot of improvements by tweaking and optimizing the model, the most sizable performance increases often come from developing our variables into a format that works best for the model. A common case is needing to make an outcome variable binary. For example, we may get survey ratings from users on a scale of one to five stars, but we need to predict whether a piece of content is good or bad. In this case, we need to change our response variable by mapping the star ratings to either the good or bad label.
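
One way to do this mapping, sketched with pandas under the assumption that 4 and 5 stars count as “good” and 1 to 3 stars as “bad” (the source does not specify a cutoff):

```python
import pandas as pd

ratings = pd.Series([5, 2, 4, 3, 1, 4], name="stars")  # hypothetical survey ratings

# Assumed cutoff: 4 or 5 stars is "good", 1 to 3 stars is "bad"
labels = ratings.map(lambda s: "good" if s >= 4 else "bad")

# Numeric binary target for modeling: 1 = good, 0 = bad
target = (ratings >= 4).astype(int)
print(pd.DataFrame({"stars": ratings, "label": labels, "target": target}))
```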

Explore feature engineering

We need to understand more about the considerations and process of adjusting our predictor variables to improve model performance. Feature engineering can vary broadly, but here we’ll discuss feature selection, feature transformation, and feature extraction.

When we’re building a model, we’ll often have features that plausibly could be predictive of our target, but in fact are not. Other times, our model’s features might contain a predictive signal for our model, but this signal can be strengthened if we manipulate the feature in a way that makes it more detectable by the model.

1. Feature Selection

Feature selection is the process of picking variables from a dataset that will be used as predictor variables for our model. Generally, there are three types of features:

Predictive: Features that by themselves contain information useful to predict the target.

Interactive: Features that are not useful by themselves to predict the target variable, but become predictive in conjunction with other features.

Irrelevant: Features that don’t contain any useful information to predict the target.

We want predictive features, but a predictive feature can also be redundant. Redundant features are highly correlated with other features and therefore do not provide the model with any new information. For example, the number of steps we take in a day may be highly correlated with the calories we burn. The goal of feature selection is to find the predictive and interactive features and exclude redundant and irrelevant ones.
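
A quick way to spot candidates for redundancy is a pairwise correlation matrix. The sketch below uses made-up daily values for steps, calories, and a hypothetical sleep_hours column, and flags pairs whose absolute Pearson correlation exceeds an assumed threshold of 0.9. It only flags pairs; deciding which member of a pair to drop is still a judgment call.

```python
import pandas as pd

# Made-up daily records; sleep_hours is a hypothetical, unrelated feature
df = pd.DataFrame({
    "steps": [4000, 6000, 8000, 10000, 12000],
    "calories": [2100, 2180, 2260, 2350, 2420],
    "sleep_hours": [7.5, 6.0, 8.0, 6.5, 7.0],
})

THRESHOLD = 0.9  # assumed cutoff for "highly correlated"
corr = df.corr().abs()  # absolute Pearson correlations

# Walk the upper triangle of the matrix so each pair is checked once
redundant_pairs = [
    (a, b)
    for i, a in enumerate(corr.columns)
    for b in corr.columns[i + 1:]
    if corr.loc[a, b] > THRESHOLD
]
print(redundant_pairs)  # [('steps', 'calories')]
```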

During the Plan phase

Once we have defined our problem and decided on a target variable to predict, we need to find features. Data professionals can spend days, weeks, or even months acquiring and assembling features from many different sources.

During the Analyze phase

Once we do an exploratory data analysis, it might become clear that some of the features we included are not suitable for modeling. This could be for a number of reasons. For example, we might find that a feature has too many missing or clearly erroneous values, or perhaps it’s highly correlated with another feature and must be dropped so as not to violate the assumptions of our model.
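
A sketch of the missing-value check, assuming a hypothetical rule of dropping any column with more than 50% missing values (the data and the threshold are invented for illustration):

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "age": [34, 51, np.nan, 29, 45],
    "income": [52000, np.nan, np.nan, np.nan, 61000],
    "tenure_years": [3, 10, 1, 2, 7],
})

MAX_MISSING = 0.5  # assumed: drop a column if more than half of its values are missing

missing_share = df.isna().mean()  # fraction of missing values per column
to_drop = missing_share[missing_share > MAX_MISSING].index.tolist()
df_clean = df.drop(columns=to_drop)

print(to_drop)  # ['income']
```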

During the Construct phase

When we are building models, the process of improving our model might include more feature selection. At this point, the objective usually is to find the smallest set of predictive features that still results in good overall model performance. Data professionals will often base final model selection not solely on score, but also on model simplicity and explainability. A model with an R² of 0.92 and 10 features might get selected over a model with an R² of 0.94 and 60 features. Models with fewer features are simpler, and simpler models are generally more stable and easier to understand.

When data professionals perform feature selection during the Construct phase, they typically use statistical methodologies to determine which features to keep and which to drop. It could be as simple as ranking the model’s feature importances and keeping only the top a% of them. Another way of doing it is to keep the top features that account for ≥ b% of the model’s predictive signal.
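
Both approaches can be sketched with scikit-learn’s RandomForestRegressor, whose fitted feature_importances_ attribute sums to 1. The synthetic data from make_regression, the model choice, and the values a = 30 and b = 90 are all assumptions; other models and importance measures (for example, permutation importance) can be used the same way.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor

# Synthetic data: 10 features, only 3 of which actually carry signal
X, y = make_regression(n_samples=500, n_features=10, n_informative=3,
                       noise=5.0, random_state=0)
feature_names = [f"x{i}" for i in range(X.shape[1])]

model = RandomForestRegressor(n_estimators=100, random_state=0).fit(X, y)

# Rank features by importance, largest first (importances sum to 1)
importances = (
    pd.Series(model.feature_importances_, index=feature_names)
    .sort_values(ascending=False)
)

# Approach 1: keep the top a% of features (a = 30 is an assumed value)
a = 30
n_keep = max(1, int(np.ceil(len(importances) * a / 100)))
top_a = importances.index[:n_keep].tolist()

# Approach 2: keep the fewest top features whose importances sum to >= b%
# (b = 90 is an assumed value)
b = 90
cumulative = importances.cumsum()
n_needed = min(len(importances), int((cumulative < b / 100).sum()) + 1)
top_b = importances.index[:n_needed].tolist()

print("top a% of features:", top_a)
print("top features covering >= b% of importance:", top_b)
```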

2. Feature Transformation

Feature transformation is a process where we take features that already exist in the dataset, and alter them so that they’re better suited to be used for training the model. Data professionals usually perform feature transformation during the Construct phase, after they’ve analyzed the data and made decisions about how to transform it based on what they’ve learned.

2.1 Log normalization

There are various types of transformations that might be required for any given model. For example, some models do not handle continuous variables with skewed distributions very well. As a solution, we can take the log of a skewed feature, reducing the skew and making the data better for modeling. This is known as log normalization.

Suppose we had a feature X1 whose histogram demonstrated the following distribution:

This is known as a log-normal distribution. A log-normal distribution is a continuous distribution whose logarithm is normally distributed. In this case, the distribution skews right, but if we transform the feature by taking its natural log, it normalizes the distribution:

Normalizing a feature’s distribution is often better for training a model, and we can later verify whether or not taking the log has helped by analyzing the model’s performance.
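
A minimal sketch with NumPy and pandas: draw a synthetic log-normal feature, take its natural log, and compare the sample skewness before and after. Note that np.log requires strictly positive values; the synthetic data and the seed are assumptions for illustration.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(seed=42)

# Synthetic right-skewed feature drawn from a log-normal distribution
x1 = pd.Series(rng.lognormal(mean=0.0, sigma=1.0, size=10_000), name="X1")

# Natural log; only valid for strictly positive values
x1_log = np.log(x1)

print(f"skewness before: {x1.skew():.2f}")
print(f"skewness after:  {x1_log.skew():.2f}")  # near 0 for a normal distribution
```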

2.2 Scaling

Scaling is when we adjust the range of a feature’s values by applying a normalization function to them. Scaling helps prevent features with very large values from having undue influence over a model compared with features that have smaller values but may be equally important predictors.

There are many scaling methodologies available. Some of the most common include:

2.2.1 Normalization

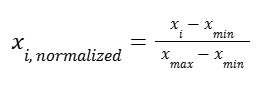

Normalization (e.g., MinMaxScaler in scikit-learn) transforms data so that each value falls within the range [0, 1]. When applied to a feature, the feature’s minimum value becomes zero and its maximum value becomes one; all other values scale to somewhere between them. The formula for this transformation is:

$$x_{\text{scaled}} = \frac{x - x_{\min}}{x_{\max} - x_{\min}}$$

Suppose we have feature 1, whose values range from 36 to 209, and feature 2, whose values range from 72 to 978:

Scatterplot of two unscaled features, each on its own horizontal axis. Feature 1 is bunched at lower values; feature 2 is spread out.

It is apparent that these features are on different scales. Features with larger magnitudes will be more influential in some machine learning algorithms, such as K-means, where Euclidean distances between data points are computed directly from the raw feature values (so a feature with large values dominates the distance compared with a feature with small values). By min-max scaling (normalizing) each feature, both are reduced to the same range:

Scatterplot of two normalized features, each on its own horizontal axis. Both features are fully distributed between 0 and 1.
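
The normalization formula can be checked against scikit-learn’s MinMaxScaler. The two features below use the ranges from the example (36 to 209 and 72 to 978), but the values in between are made up.

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import MinMaxScaler

# Endpoints match the example ranges; the values in between are made up
df = pd.DataFrame({
    "feature_1": [36, 50, 75, 120, 209],
    "feature_2": [72, 300, 510, 760, 978],
})

# MinMaxScaler defaults to feature_range=(0, 1)
scaled = pd.DataFrame(MinMaxScaler().fit_transform(df), columns=df.columns)

# The same transformation by hand: (x - min) / (max - min), per column
manual = (df - df.min()) / (df.max() - df.min())

print(scaled.round(3))
print(np.allclose(scaled, manual))  # True
```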

2.2.2 Standardization

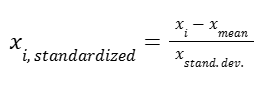

Standardization (e.g., StandardScaler in scikit-learn) transforms the values of a feature so that they collectively have a mean of zero and a standard deviation of one. To do this, subtract the feature’s mean from each value and divide by the feature’s standard deviation:

$$z = \frac{x - \mu}{\sigma}$$

where $\mu$ is the mean and $\sigma$ is the standard deviation of the feature.

This method centers the feature’s values on zero, which benefits some machine learning algorithms. It also preserves outliers, since it does not place a hard cap on the range of possible values. Here is the same data from above after applying standardization:

Scatterplot of two standardized features, each on its own horizontal axis. Both features are distributed between about -1.5 and 1.8.

Notice that the points are spatially distributed in a way that is very similar to the result of normalizing, but the values and scales are different. In this case, the values now range from -1.49 to 1.76.
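
A matching sketch for standardization with StandardScaler. It uses made-up values within the example ranges, so the resulting range will not match the -1.49 to 1.76 above. StandardScaler divides by the population standard deviation (ddof=0), which is why the manual version passes ddof=0 to pandas’ std().

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import StandardScaler

# Made-up values within the example ranges
df = pd.DataFrame({
    "feature_1": [36, 50, 75, 120, 209],
    "feature_2": [72, 300, 510, 760, 978],
})

standardized = pd.DataFrame(StandardScaler().fit_transform(df), columns=df.columns)

# By hand: (x - mean) / std, using the population standard deviation (ddof=0)
manual = (df - df.mean()) / df.std(ddof=0)

print(standardized.round(2))
print(np.allclose(standardized, manual))  # True
```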

2.3 Encoding

Variable encoding is the process of converting categorical data to numerical data. Imagine a dataset with a feature called “Geography”, whose values represent each customer’s country of residence: France, Germany, or Spain. Most machine learning methodologies cannot extract meaning from strings. Encoding transforms the strings to numbers that can be interpreted mathematically.

The “Geography” column contains nominal values, meaning values that don’t have an inherent order or ranking. Such a feature is typically one-hot encoded into binary columns: one column is added to represent each possible class contained within the feature.

| Geography | Is France | Is Germany | Is Spain |
|---|---|---|---|
| France | 1 | 0 | 0 |
| Germany | 0 | 1 | 0 |
| Spain | 0 | 0 | 1 |

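Tools commonly used to do this include pandas.get_dummies() and OneHotEncoder() from sklearn. Both can optionally drop one of the columns (drop_first=True in get_dummies(), or drop="first" in OneHotEncoder) to avoid having redundant information in the dataset. For example, with the “Is Spain” column dropped: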

| Customer | Is France | Is Germany |
|---|---|---|
| Antonio García | 0 | 0 |

No information is lost by doing this: because both remaining columns are 0, we know this customer is from Spain.
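
A sketch of both variants with pandas.get_dummies(). The dtype=int argument returns 0/1 columns instead of booleans, and the prefix arguments reproduce the “Is …” column names used in the tables. Note that drop_first=True drops the first category in sorted order (France here), not Spain; either choice keeps all the information.

```python
import pandas as pd

df = pd.DataFrame({"Geography": ["France", "Germany", "Spain"]})

# One 0/1 column per country, named "Is France", "Is Germany", "Is Spain"
full = pd.get_dummies(df["Geography"], prefix="Is", prefix_sep=" ", dtype=int)

# Drop one column to remove redundancy; a row of zeros now means "Spain"
reduced = full.drop(columns="Is Spain")

# drop_first=True drops the first category in sorted order ("Is France")
alt = pd.get_dummies(df["Geography"], prefix="Is", prefix_sep=" ",
                     drop_first=True, dtype=int)

print(reduced)
print(list(alt.columns))  # ['Is Germany', 'Is Spain']
```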

Some features may be inferred to be numerical by Python or other frameworks even though they represent a category. For example, suppose we had a dataset with people assigned to different arbitrary groups:

| Name | Group |
|---|---|
| Rachel Stein | 2 |
| Ahmed Abadi | 2 |
| Sid Avery | 3 |
| Ha-rin Choi | 1 |

The “Group” column might be encoded as type int, but the number is really only representative of a category. Group 3 isn’t two units “greater than” group 1. The groups could just as easily be labeled with colors. In this case, we could first convert the column to a string, and then encode the strings as binary columns. This is a problem that can be solved upstream at the stage of data generation: categorical features (like a group) should not be recorded using a number.
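
A sketch of that fix for the table above: cast the integer codes to strings, then one-hot encode them. Strictly speaking, pd.get_dummies() encodes any column passed in columns= regardless of dtype, so the cast mainly documents intent and keeps other tools from treating the codes as quantities.

```python
import pandas as pd

df = pd.DataFrame({
    "Name": ["Rachel Stein", "Ahmed Abadi", "Sid Avery", "Ha-rin Choi"],
    "Group": [2, 2, 3, 1],
})

# Treat the group numbers as labels rather than quantities
df["Group"] = df["Group"].astype(str)

# One binary column per group: Group_1, Group_2, Group_3
encoded = pd.get_dummies(df, columns=["Group"], dtype=int)
print(encoded)
```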

A different kind of encoding can be used for features that contain discrete or ordinal values. This is called ordinal encoding. It is used when the values do contain inherent order or ranking. For instance, consider a “Temperature” column that has values of cold, warm, and hot. In this case, ordinal encoding could reassign these classes to 0, 1, and 2.

| Temperature | Temperature (Ordinal encoding) |
|---|---|
| cold | 0 |
| warm | 1 |
| hot | 2 |

This method retains the order or ranking of the classes relative to one another.
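
A sketch using scikit-learn’s OrdinalEncoder. Passing the categories explicitly matters: by default the encoder sorts categories alphabetically, which would give cold = 0, hot = 1, warm = 2 and break the intended order. A plain pandas map() with an explicit dictionary is shown as an alternative.

```python
import pandas as pd
from sklearn.preprocessing import OrdinalEncoder

df = pd.DataFrame({"Temperature": ["cold", "warm", "hot", "warm"]})

# Spell out the order; the default (categories="auto") would sort alphabetically
encoder = OrdinalEncoder(categories=[["cold", "warm", "hot"]])
df["Temperature (Ordinal encoding)"] = (
    encoder.fit_transform(df[["Temperature"]]).ravel().astype(int)
)

# Plain pandas alternative with an explicit mapping
order = {"cold": 0, "warm": 1, "hot": 2}
df["Temperature (mapped)"] = df["Temperature"].map(order)

print(df)
```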

3. Feature Extraction

Feature extraction involves producing new features from existing ones, with the goal of having features that deliver more predictive power to our model. While there is some overlap between extraction and transformation informally, the main difference is that a new feature is created from one or more other features rather than simply changing one that already exists.

Consider a feature called “Date of Last Purchase,” which contains information about when a customer last purchased something from the company. Instead of giving the model raw dates, a new feature can be extracted called “Days Since Last Purchase.” This could tell the model how long it has been since a customer has bought something from the company, giving insight into the likelihood that they’ll buy something again in the future. Suppose that today’s date is May 30th; extracting the new feature could look something like this:

| Date of Last Purchase | Days Since Last Purchase |
|---|---|
| May 17th | 13 |
| May 29th | 1 |
| May 10th | 20 |
| May 21st | 9 |
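
A pandas sketch of this extraction. The source does not give a year, so 2024 is assumed here purely for illustration; any single year gives the same day differences for these dates.

```python
import pandas as pd

# The source gives no year; 2024 is assumed only for illustration
today = pd.Timestamp("2024-05-30")

df = pd.DataFrame({
    "Date of Last Purchase": pd.to_datetime(
        ["2024-05-17", "2024-05-29", "2024-05-10", "2024-05-21"]
    ),
})

# Subtracting dates gives timedeltas; .dt.days extracts whole days
df["Days Since Last Purchase"] = (today - df["Date of Last Purchase"]).dt.days
print(df)
```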

Features can also be extracted from multiple variables. Consider modeling whether a customer will return to buy something else. In the data, there are two variables: “Days Since Last Purchase” and “Price of Last Purchase.” A new variable can be created from these by dividing the price by the number of days since the last purchase:

| Days Since Last Purchase | Price of Last Purchase | Dollars Per Day Since Last Purchase |
|---|---|---|
| 13 | $85 | $6.54 |
| 1 | $15 | $15.00 |
| 20 | $8 | $0.40 |
| 9 | $43 | $4.78 |
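
The same ratio in pandas, as a sketch. The clip(lower=1) guard is an added assumption, not part of the source: a purchase made today would have 0 days and cause a division by zero, so it is treated as 1 day here.

```python
import pandas as pd

df = pd.DataFrame({
    "Days Since Last Purchase": [13, 1, 20, 9],
    "Price of Last Purchase": [85, 15, 8, 43],  # in dollars
})

# Assumption: treat a same-day purchase (0 days) as 1 day to avoid dividing by zero
days = df["Days Since Last Purchase"].clip(lower=1)

df["Dollars Per Day Since Last Purchase"] = (
    df["Price of Last Purchase"] / days
).round(2)
print(df)
```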

Sometimes, the features that we are able to generate through extraction offer the greatest performance boosts to our model. Finding good features in the raw data can be a trial-and-error process.

As a summary:

- Feature Selection is the process of dropping any and all unnecessary or unwanted features from the dataset.

- Feature Transformation is the process of editing features into a form where they’re better for training the model.

- Feature Extraction is the process of creating brand new features from other features that already exist in the dataset.

Disclaimer: Like most of my posts, this content is intended solely for educational purposes and was created primarily for my personal reference. At times, I may rephrase original texts, and in some cases, I include materials such as graphs, equations, and datasets directly from their original sources.

I typically reference a variety of sources and update my posts whenever new or related information becomes available. For this particular post, the primary source was Google Advanced Data Analytics Professional Certificate program.